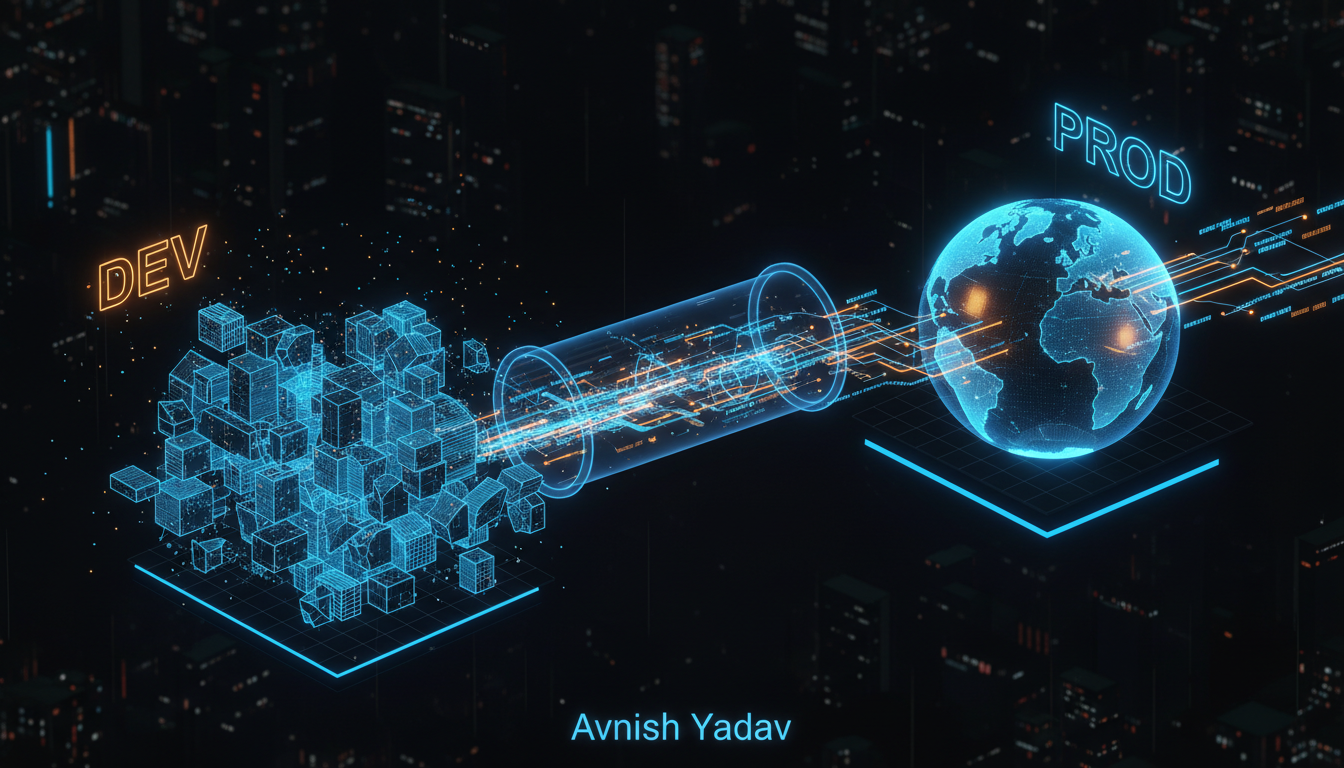

From Development to Production: A Builder's Guide to Frontend Deployment Strategies

Stop manually dragging folders to FTP. Learn how to architect robust deployment pipelines for React and modern frontend frameworks using Docker, AWS, and CI/CD.

The Localhost Illusion

There is a specific kind of anxiety that hits when you commit the final feature, close your terminal, and realize: Now I have to make this work for everyone else.

As engineers, we spend 90% of our time in the comfortable bubble of localhost:3000. We have hot-module reloading, unminified errors, and zero network latency. But shipping is the only feature that actually matters. Until a user can access your application via a secure HTTPS URL, you haven't built a product; you've built a prototype.

In the modern frontend landscape, deployment isn't just about putting files on a server. It's about cache invalidation, edge distribution, atomic builds, and environment management. Today, I'm going to walk you through the three tiers of frontend deployment strategies, ranging from developer-centric PaaS solutions to full-control containerization.

Strategy 1: The "Zero-Config" PaaS (Vercel / Netlify)

If you are building a micro-SaaS, a portfolio, or a JAMstack site, this is your starting line. Platforms like Vercel and Netlify have commoditized the DevOps pipeline: they wrap serverless functions and a global CDN in a developer-friendly UX.

How it works under the hood

When you push to Git, webhooks trigger a build on their servers. They detect your framework (Next.js, Vite, CRA), run the build command, and instantly distribute the static assets to a Content Delivery Network (CDN).
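The detection step can be pictured as a lookup against package.json. The sketch below is a hypothetical heuristic, not how Vercel or Netlify actually implement detection; the function names `detect_framework` and `output_dir` are invented for illustration. It also shows why the build output folder differs between tools.

```shell
# Guess the framework from the dependencies listed in package.json.
# Hypothetical heuristic: real platforms use their own, more thorough checks.
detect_framework() {
  pkg="$1/package.json"
  if [ ! -f "$pkg" ]; then
    echo "unknown"
    return 1
  fi
  if grep -q '"next"' "$pkg"; then
    echo "nextjs"
  elif grep -q '"vite"' "$pkg"; then
    echo "vite"
  elif grep -q '"react-scripts"' "$pkg"; then
    echo "create-react-app"
  else
    echo "unknown"
  fi
}

# Map a framework name to its default build output directory.
output_dir() {
  case "$1" in
    nextjs)           echo ".next" ;;
    vite)             echo "dist" ;;
    create-react-app) echo "build" ;;
    *)                echo "unknown" ;;
  esac
}
```

Running `output_dir "$(detect_framework .)"` from a project root would print the folder a pipeline should publish, assuming the project keeps its tool's default output setting.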

The Pros:

- Instant CI/CD: Preview deployments for every Pull Request are a game-changer for team velocity.

- Edge Functions: You can run server-side logic at the edge without provisioning a backend.

- Zero Maintenance: SSL and scaling are handled automatically.

The Cons:

- Vendor Lock-in: You are playing by their rules and pricing models.

- Cost at Scale: Bandwidth on these platforms is typically priced well above raw AWS/Azure egress rates.

Verdict: Use this for MVP validation and projects where speed of iteration beats infrastructure cost optimization.

Strategy 2: The Enterprise Standard (AWS S3 + CloudFront)

When you need total control over costs and security headers, or you're deploying within a strict VPC (Virtual Private Cloud), you move to the raw cloud primitives.

This strategy decouples storage from delivery.

- S3 (Simple Storage Service): Acts as the 'hard drive' where your HTML, CSS, and JS bundles live.

- CloudFront (CDN): Sits in front of S3, caching content at edge locations globally to reduce latency.
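Because CloudFront caches whatever S3 hands it, the Cache-Control metadata set at upload time largely decides how long edge locations keep each file. Here is a minimal sketch of one common split, assuming every file except index.html has a content hash in its name (true for most bundler output, but not for files like favicon.ico or robots.txt). The function name `upload_with_cache_headers` is hypothetical.

```shell
# Upload a build folder with two caching policies.
#   $1 = local build directory, $2 = bucket name (both supplied by the caller)
upload_with_cache_headers() {
  # Fingerprinted assets never change under the same name, so cache them for a year.
  aws s3 sync "$1" "s3://$2" --delete --exclude "index.html" \
    --cache-control "public, max-age=31536000, immutable" || return 1

  # index.html points at the current bundles, so it must be revalidated on each request.
  aws s3 cp "$1/index.html" "s3://$2/index.html" \
    --cache-control "no-cache"
}
```

With a split like this, a deploy usually only needs index.html to be refreshed at the edge, since new bundles arrive under new file names.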

The Implementation

This is not drag-and-drop. You need to manage the upload process yourself. Here is a simplified breakdown of an automated pipeline using the AWS CLI:

```shell
# 1. Build the project (Create React App writes to build/; Vite writes to dist/)
npm run build

# 2. Sync to S3 (--delete removes remote files that are no longer in the build)
aws s3 sync build/ s3://my-app-bucket --delete

# 3. Invalidate the CloudFront cache (crucial step!)
aws cloudfront create-invalidation --distribution-id E123456789 --paths "/*"
```

The "React Router" Problem

A common pitfall here is client-side routing. If a user visits /dashboard directly, S3 looks for an object with the key dashboard, fails to find it, and returns a 404 (or a 403 when the bucket does not allow listing). You must configure CloudFront custom error responses to serve index.html with a 200 status for 403/404 errors so React Router can handle the path internally.
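A quick way to confirm the fallback is in place is to request a deep link directly and look at the status code. The sketch below assumes `curl` is installed; the function name `check_deep_link` and the example URL are hypothetical.

```shell
# Succeeds only if the given URL answers with HTTP 200.
#   $1 = full URL of a client-side route, e.g. https://app.example.com/dashboard
check_deep_link() {
  status=$(curl -s -o /dev/null -w '%{http_code}' "$1")
  echo "$1 -> $status"
  [ "$status" = "200" ]
}
```

Calling `check_deep_link "https://app.example.com/dashboard"` as the last step of a deploy script makes a missing error-page mapping fail the pipeline instead of surprising a user.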

Strategy 3: The "Containerized" Approach (Docker + Nginx)

This is my preferred method for complex applications, especially those that need to run in a Kubernetes cluster or a hybrid cloud environment. By containerizing your frontend, you ensure that the environment is exactly the same in development, staging, and production.

We use a Multi-Stage Docker Build. This keeps the final image tiny by discarding the Node.js runtime after the build is complete.

The Dockerfile

Save this as Dockerfile in your root:

```dockerfile
# Stage 1: Build the React Application
FROM node:18-alpine AS builder
WORKDIR /app
COPY package.json package-lock.json ./
RUN npm ci
COPY . .
RUN npm run build

# Stage 2: Serve with Nginx
FROM nginx:alpine
# Copy the build output to Nginx's html folder
# (Vite writes to dist/; use /app/build for Create React App)
COPY --from=builder /app/dist /usr/share/nginx/html
# Copy custom nginx config to handle client-side routing
COPY nginx.conf /etc/nginx/conf.d/default.conf
EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]
```

The Nginx Config

You need a custom `nginx.conf` to handle the SPA routing (similar to the S3 issue):

```nginx
server {
    listen 80;

    location / {
        root /usr/share/nginx/html;
        index index.html index.htm;
        # Fallback to index.html for SPA routing
        try_files $uri $uri/ /index.html;
    }
}
```

With this setup, your frontend is just another container. You can deploy it to AWS ECS, Google Cloud Run, or a $5 DigitalOcean droplet with ease.
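Before pushing the image anywhere, it is worth running it locally once. This is a minimal sketch, assuming Docker is installed and the command is run from the project root; the function name `run_local`, the container name `frontend-local`, and the example tag are hypothetical.

```shell
# Build the image from the Dockerfile in the current directory and serve it locally.
#   $1 = image tag (e.g. myapp:local), $2 = host port mapped to Nginx's port 80
run_local() {
  docker build -t "$1" . &&
  docker run --rm -d --name frontend-local -p "$2:80" "$1" &&
  echo "Serving on http://localhost:$2 (stop with: docker stop frontend-local)"
}
```

After `run_local myapp:local 8080`, opening http://localhost:8080/dashboard directly should load the app rather than an Nginx 404, which confirms the `try_files` fallback is working.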

Automating the Pipeline (GitHub Actions)

Manual deployments are forbidden. If you have to type a command to deploy production, you have a single point of failure (you). Let's automate the Docker build and push using GitHub Actions.

Create .github/workflows/deploy.yml:

```yaml
name: Build and Push Docker Image

on:
  push:
    branches: [ "main" ]

jobs:
  build-and-push:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout Code
        uses: actions/checkout@v3

      - name: Login to Docker Hub
        uses: docker/login-action@v2
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Build and Push
        uses: docker/build-push-action@v4
        with:
          context: .
          push: true
          tags: myuser/myapp:latest
```

Now, every time you merge to `main`, a fresh production image is baked and ready to ship.
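One refinement worth considering: `latest` is a moving tag, so it cannot tell you which commit is running or give you a fixed target to roll back to. A possible approach is to publish a second tag derived from the commit SHA. The helper below is a sketch; the function name `image_tags` is hypothetical, and the SHA would come from `git rev-parse HEAD` locally or the `GITHUB_SHA` environment variable inside GitHub Actions.

```shell
# Print the tags to publish for one build, one per line:
# an immutable tag based on the short commit SHA, plus the moving "latest" tag.
#   $1 = image repository (e.g. myuser/myapp), $2 = full commit SHA
image_tags() {
  short_sha=$(printf '%s' "$2" | cut -c1-7)
  printf '%s:%s\n' "$1" "$short_sha"
  printf '%s:latest\n' "$1"
}
```

Each printed line can be passed to `docker build` as its own `-t` flag, so a deployment can pin the SHA tag while `latest` keeps pointing at the newest build.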

Which strategy should you choose?

Decision paralysis is real. Here is the framework I use to decide:

- Choose Managed PaaS (Vercel/Netlify) if: You are a solo founder, working on a side project, or an agency needing speed. The developer experience is unbeatable.

- Choose S3 + CloudFront if: You are cost-sensitive, expect massive traffic, and are already deep in the AWS ecosystem. It is usually among the cheapest ways to serve static files at scale.

- Choose Docker if: You need "Write Once, Run Anywhere." If your organization uses Kubernetes or requires strict governance over the OS level, containers are the standard.

Final Thoughts

The gap between a junior developer and a senior engineer often lies in the pipeline. Writing React code is fun, but ensuring that code reaches the user reliably, securely, and performantly is where the real engineering happens.

Stop deploying from your laptop. Build the pipeline. Ship the system.
